Fritz!DNS - An authoritative DNS server for AVM FRITZ!Box routers

In my home network, I am using an AVM FRITZ!Box Cable 6690. It handles DHCP, DNS and Wi-Fi, and recently it has also started connecting my home network to my servers via WireGuard.

Just like the venerable Dnsmasq, AVM's FRITZ!OS takes the hostnames learned from its DHCP leases and makes them resolvable via its internal DNS server.

Unfortunately, this feature in FRITZ!OS has some limitations:

- The name of the DNS zone is hard-coded to `fritz.box` and cannot be adjusted. Hence, the resolvable names follow the schema `myhostname.fritz.box`.

- The internal DNS server only supports recursive DNS lookups. It does not act as an authoritative DNS server. Hence, the local zone cannot be delegated.

- AXFR zone transfers are not supported.

My solution to these shortcomings is Fritz-DNS which:

- Is a small tool written in the Go programming language.

- Is an authoritative DNS server that serves A / AAAA resource records for local hosts connected to an AVM FRITZ!Box home Wi-Fi router.

- Can be used in a hidden master configuration as it supports AXFR zone transfers.

- Uses the custom extension (`X_AVM-DE_GetHostListPath`) of the TR-064 Hosts SOAP API, as documented by AVM, to retrieve a list of local hosts.

- Supports the generation of AAAA (IPv6) resource records based on the hosts' MAC addresses, using the 64-bit Extended Unique Identifier (EUI-64) and a configured unique local address (ULA) prefix.

- Does not yet support PTR resource records (to be implemented…)

- Is licensed under the Apache 2.0 license

You can find Fritz-DNS on Codeberg: /stv0g/fritz-dns.

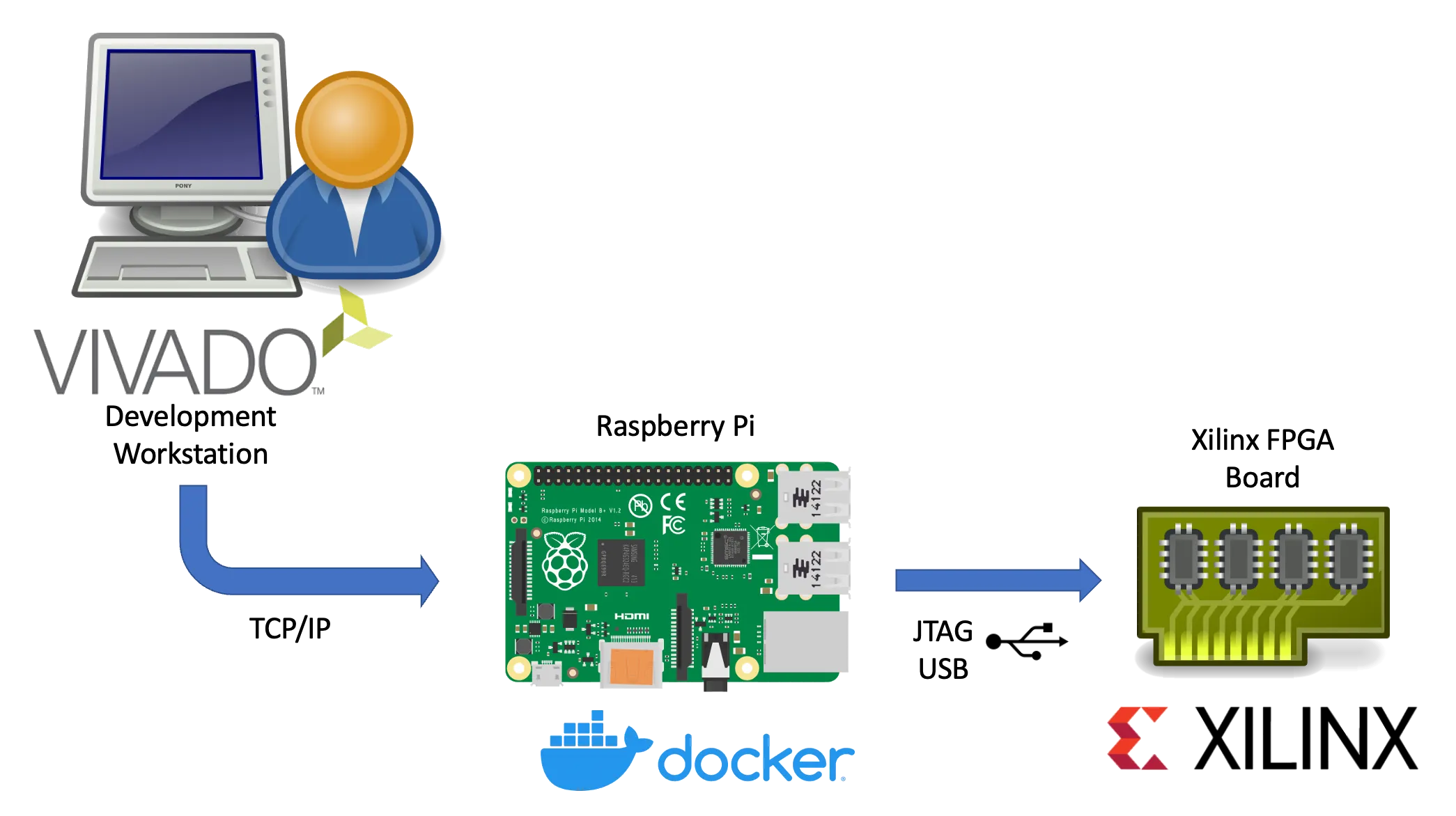

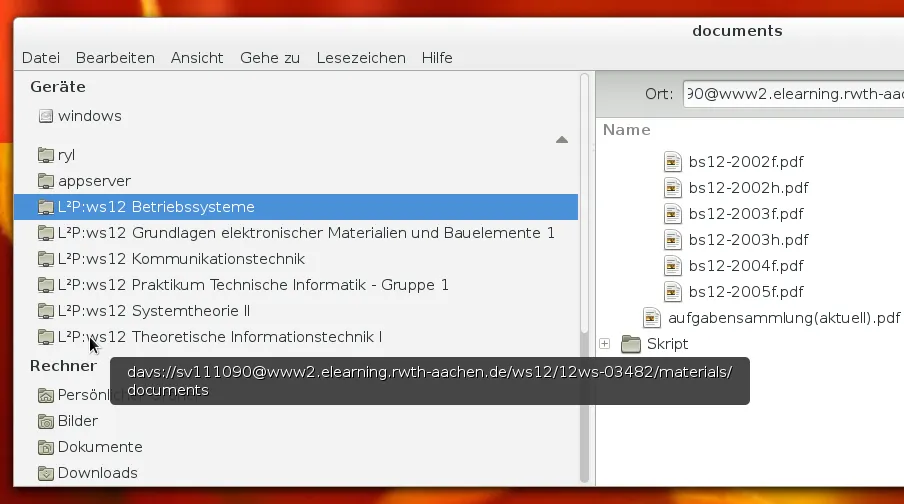

The figure above illustrates how Fritz-DNS interacts with the FRITZ!Box and with other DNS servers and clients.
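On the FRITZ!Box side, this interaction starts with a single TR-064 SOAP action. The following Go sketch only builds that request and decodes the reply. It does not perform the HTTP call, and it leaves out authentication with the `-user`/`-pass` credentials. Several identifiers come from AVM's public TR-064 description rather than the Fritz-DNS source, so verify them against your box's own service description:

- the service type `urn:dslforum-org:service:Hosts:1`
- the control path `/upnp/control/hosts`
- the TR-064 port 49000
- the output argument `NewX_AVM-DE_HostListPath`

The helper names and the sample response are hypothetical.

```go
package main

import (
	"encoding/xml"
	"fmt"
	"net/http"
	"strings"
)

// Assumed TR-064 identifiers for the Hosts service.
const (
	hostsServiceType = "urn:dslforum-org:service:Hosts:1"
	hostsControlPath = "/upnp/control/hosts"
	hostListAction   = "X_AVM-DE_GetHostListPath"
)

// newHostListPathRequest (hypothetical) builds an unauthenticated SOAP request
// asking the FRITZ!Box where its XML host list can be downloaded.
func newHostListPathRequest(baseURL string) (*http.Request, error) {
	envelope := `<?xml version="1.0"?>` +
		`<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/"` +
		` s:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/">` +
		`<s:Body><u:` + hostListAction + ` xmlns:u="` + hostsServiceType + `"/></s:Body>` +
		`</s:Envelope>`
	endpoint := strings.TrimRight(baseURL, "/") + hostsControlPath
	req, err := http.NewRequest(http.MethodPost, endpoint, strings.NewReader(envelope))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", `text/xml; charset="utf-8"`)
	req.Header.Set("SOAPAction", hostsServiceType+"#"+hostListAction)
	return req, nil
}

// hostListPathResponse extracts the path from the SOAP reply. encoding/xml
// matches on local element names, so namespace prefixes are ignored.
type hostListPathResponse struct {
	Path string `xml:"Body>X_AVM-DE_GetHostListPathResponse>NewX_AVM-DE_HostListPath"`
}

// parseHostListPath (hypothetical) returns the host list path from a reply body.
func parseHostListPath(data []byte) (string, error) {
	var resp hostListPathResponse
	if err := xml.Unmarshal(data, &resp); err != nil {
		return "", err
	}
	if resp.Path == "" {
		return "", fmt.Errorf("no host list path in response")
	}
	return resp.Path, nil
}

func main() {
	req, err := newHostListPathRequest("http://fritz.box:49000")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL, req.Header.Get("SOAPAction"))

	// Hypothetical reply body shaped like a TR-064 SOAP response.
	sample := []byte(`<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/"><s:Body>` +
		`<u:X_AVM-DE_GetHostListPathResponse xmlns:u="urn:dslforum-org:service:Hosts:1">` +
		`<NewX_AVM-DE_HostListPath>/devicehostlist.lua?sid=0123456789abcdef</NewX_AVM-DE_HostListPath>` +
		`</u:X_AVM-DE_GetHostListPathResponse></s:Body></s:Envelope>`)
	path, err := parseHostListPath(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(path)
}
```

A real client would then fetch the returned path from the same host and decode the XML host list. That list provides the hostnames, IPv4 addresses and MAC addresses that the A and AAAA records are built from.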

CLI Usage

```
$ fritz-dns
Usage of fritz-dns:
  -ipv6-ula-prefix string
        Fritz Box IPv6 ULA Prefix (default "fd00::/64")
  -pass string
        FritzBox password
  -port int
        Listen port (default 53)
  -soa-expire duration
        SOA expire value (default 744h0m0s)
  -soa-mbox string
        SOA mailbox value
  -soa-minttl duration
        SOA minimum TTL value (default 1h0m0s)
  -soa-ns string
        Authorative DNS server for the zone
  -soa-refresh duration
        SOA refresh value (default 2h0m0s)
  -soa-retry duration
        SOA retry value (default 1h0m0s)
  -ttl duration
        default TTL values for records (default 5m0s)
  -url string
        FritzBox URL (default "http://fritz.box/")
  -user string
        FritzBox username (default "admin")
  -zone string
        DNS Zone (default "fritz.box.")
```
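The SOA-related options above take Go duration strings, while an SOA record carries plain seconds. As a hedged illustration, the following Go sketch re-declares those flags with the same names and defaults using the standard `flag` package and renders them as a zone-file SOA line. Several parts are hypothetical and not taken from the Fritz-DNS source: the `soaConfig` type, the `parseSOAFlags` and `record` functions, the serial number, the use of `-ttl` as the SOA record's own TTL, and the `example.com` names.

```go
package main

import (
	"flag"
	"fmt"
	"time"
)

// soaConfig (hypothetical) mirrors the SOA-related flags from the usage output.
type soaConfig struct {
	zone, ns, mbox                      string
	ttl, refresh, retry, expire, minTTL time.Duration
}

// record renders the SOA in zone-file presentation format, in whole seconds.
func (c soaConfig) record(serial uint32) string {
	secs := func(d time.Duration) int64 { return int64(d / time.Second) }
	return fmt.Sprintf("%s %d IN SOA %s %s %d %d %d %d %d",
		c.zone, secs(c.ttl), c.ns, c.mbox, serial,
		secs(c.refresh), secs(c.retry), secs(c.expire), secs(c.minTTL))
}

// parseSOAFlags declares the flags with the defaults shown in the usage output.
func parseSOAFlags(args []string) (soaConfig, error) {
	var c soaConfig
	fs := flag.NewFlagSet("fritz-dns", flag.ContinueOnError)
	fs.StringVar(&c.zone, "zone", "fritz.box.", "DNS Zone")
	fs.StringVar(&c.ns, "soa-ns", "", "Authoritative DNS server for the zone")
	fs.StringVar(&c.mbox, "soa-mbox", "", "SOA mailbox value")
	fs.DurationVar(&c.ttl, "ttl", 5*time.Minute, "default TTL values for records")
	fs.DurationVar(&c.refresh, "soa-refresh", 2*time.Hour, "SOA refresh value")
	fs.DurationVar(&c.retry, "soa-retry", time.Hour, "SOA retry value")
	fs.DurationVar(&c.expire, "soa-expire", 744*time.Hour, "SOA expire value")
	fs.DurationVar(&c.minTTL, "soa-minttl", time.Hour, "SOA minimum TTL value")
	err := fs.Parse(args)
	return c, err
}

func main() {
	c, err := parseSOAFlags([]string{"-soa-ns", "ns1.example.com.", "-soa-mbox", "hostmaster.example.com."})
	if err != nil {
		panic(err)
	}
	fmt.Println(c.record(1))
	// fritz.box. 300 IN SOA ns1.example.com. hostmaster.example.com. 1 7200 3600 2678400 3600
}
```

With the defaults, `744h0m0s` becomes an expire value of 2678400 seconds (31 days), and the 5-minute `-ttl` becomes 300 seconds.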